•••

TL;DR: If you want a simple template/boilerplate to get you started with TypeScript + Node.js, clone this repo.

As I started spending more time writing JavaScript, the more I missed a stricter type-system to lean on. TypeScript seemed like a great fit, but coming from Go, JavaScript/TypeScript projects require a ton of configuration to get started. Knowing what dependencies you need (typescript, tslint, node-ts), linter configuration (tslint.json), and putting together the right tsconfig.json for a Node app (vs. a browser app) wasn’t well documented. I wanted to compile to ES6, use CommonJS modules (what Node.js consumes), and generate type definitions alongside the .js files so that editors like VS Code (or other TypeScript authors) can benefit.

After doing this for a couple of small projects, I figured I’d had enough, and put together node-typescript-starter, a minimal-but-opinionated configuration for TypeScript + Node.js. It’s easy enough to change things, but it should provide a basis for writing code rather than configuration.

To get started, just clone the repo and write some TypeScript:

git clone https://github.com/elithrar/node-typescript-starter.git

# Then: replace what you need to in package.json, update the LICENSE file

yarn install # or npm install

# Start writing TypeScript!

open src/App.ts

… and then build it:

yarn build

# Outputs:

# yarn build v0.22.0

# $ tsc

# ✨ Done in 2.89s.

And that’s it. PR’s are welcome, keeping in mind the intentionally minimal approach.

•••

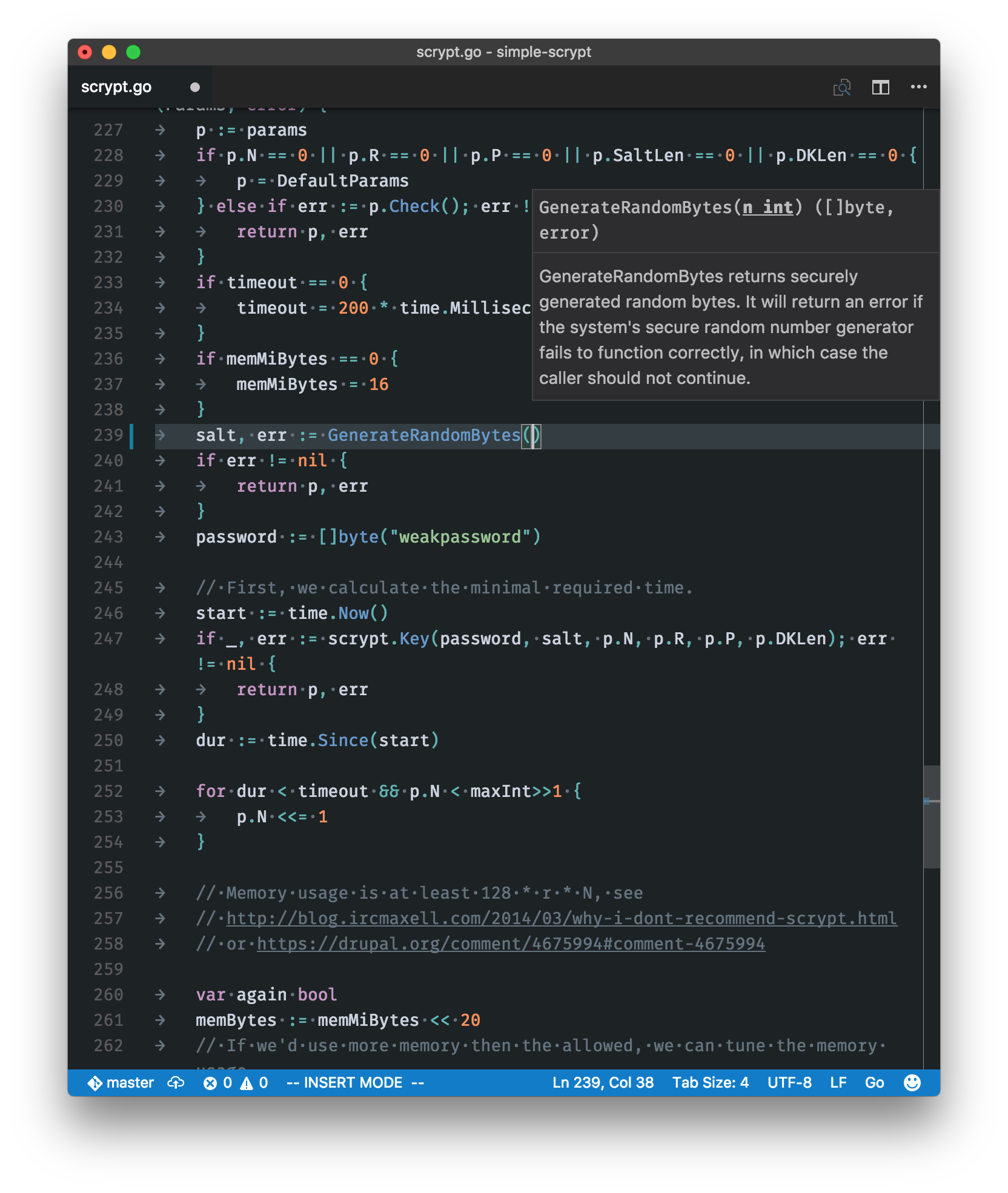

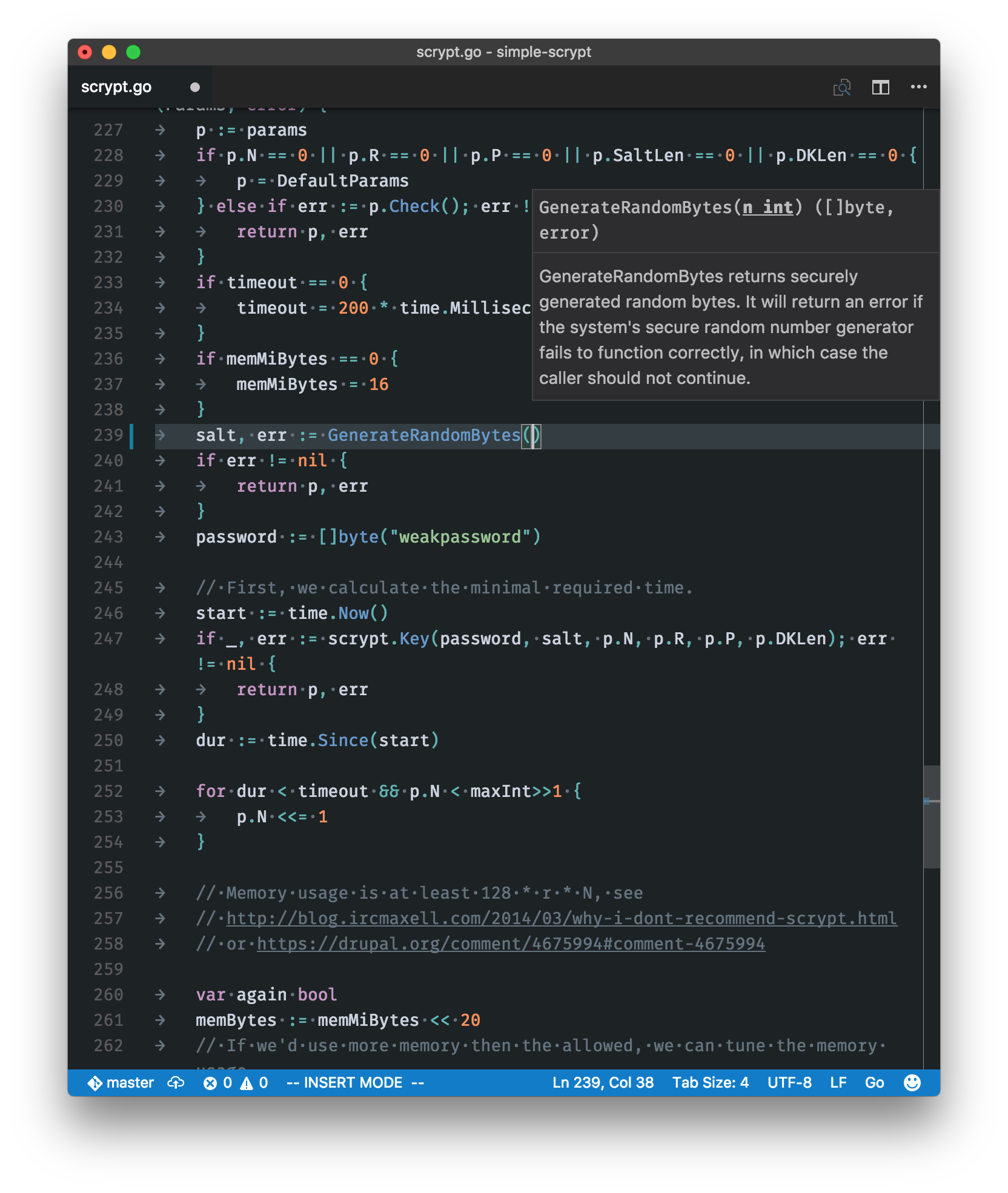

I’ve been using vim for a while now, but the recent noise around Visual Studio Code had me curious, especially given some long-running frustrations with vim. I have a fairly comprehensive vim config, but it often feels fragile. vim-go, YouCompleteMe & ctags sit on top of vim to provide autocompletion: but re-compiling libraries, dealing with RPC issues and keeping it working when you just want to write code can be Not Fun. Adding proper autocompletion for additional languages—like Python and Ruby—is an exercise in patience (and spare time).

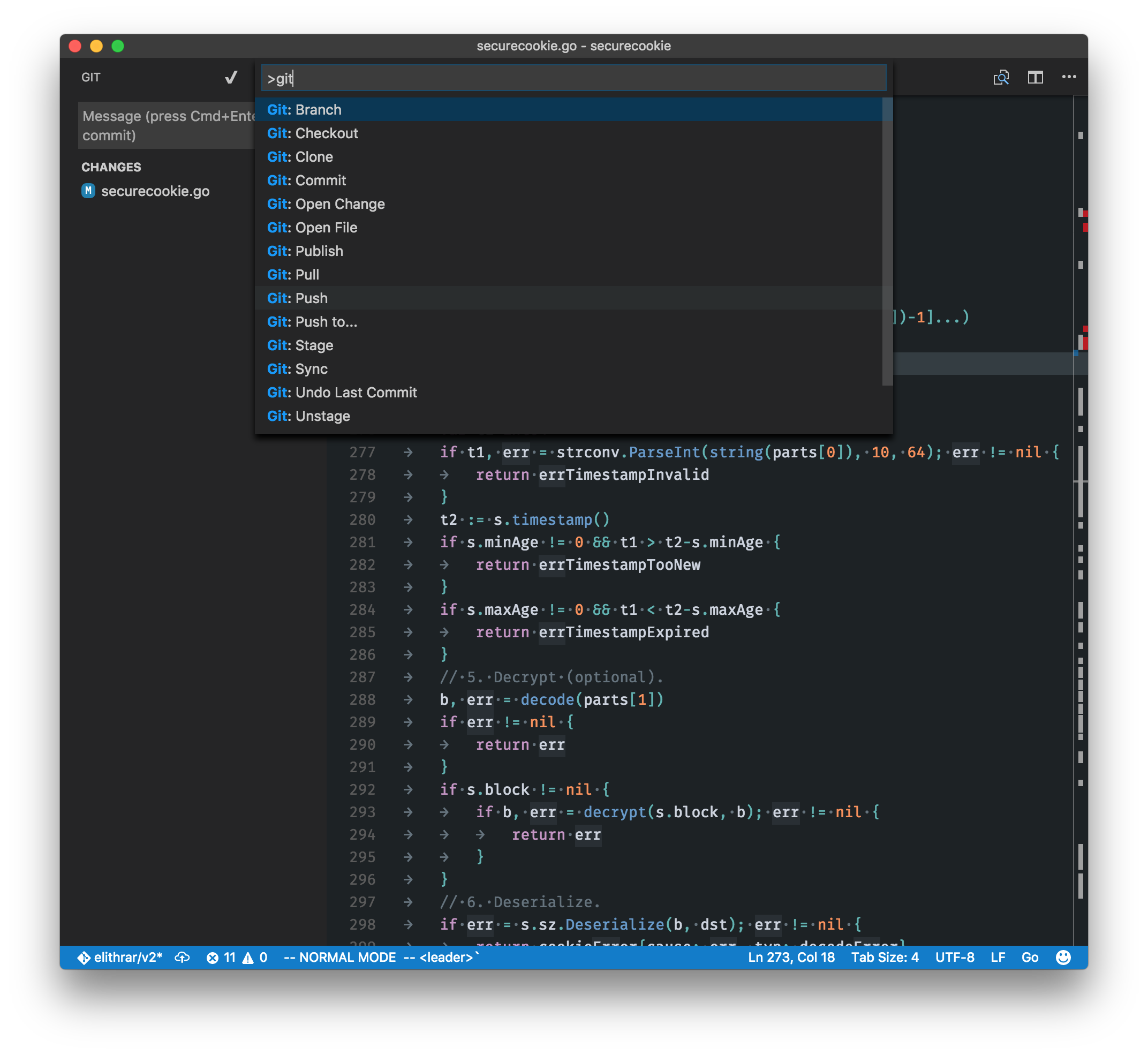

VS Code, on the other hand, is pretty polished out of the box: autocompletion is significantly more robust (and tooltips are richer), adding additional languages is extremely straightforward. If you write a lot of JavaScript/Babel or TypeScript, then it has some serious advantages (JS-family support is first-class). And despite the name, Visual Studio Code (“VS Code”) doesn’t bear much resemblance to Visual Studio proper. Instead, it’s most similar to GitHub’s Atom editor: more text editor than full-blown IDE, but with a rich extensions interface that allows you to turn it into an IDE depending on what language(s) you hack on.

Thus, I decided to run with VS Code for a month to see whether I could live with it. I’ve read articles by those switching from editors like Atom, but nothing on a hard-swap from vim. To do this properly, I went all in:

- I aliased

vim to code in my shell (bad habits and all that)

- Changed my shell’s

$EDITOR to code

- Set

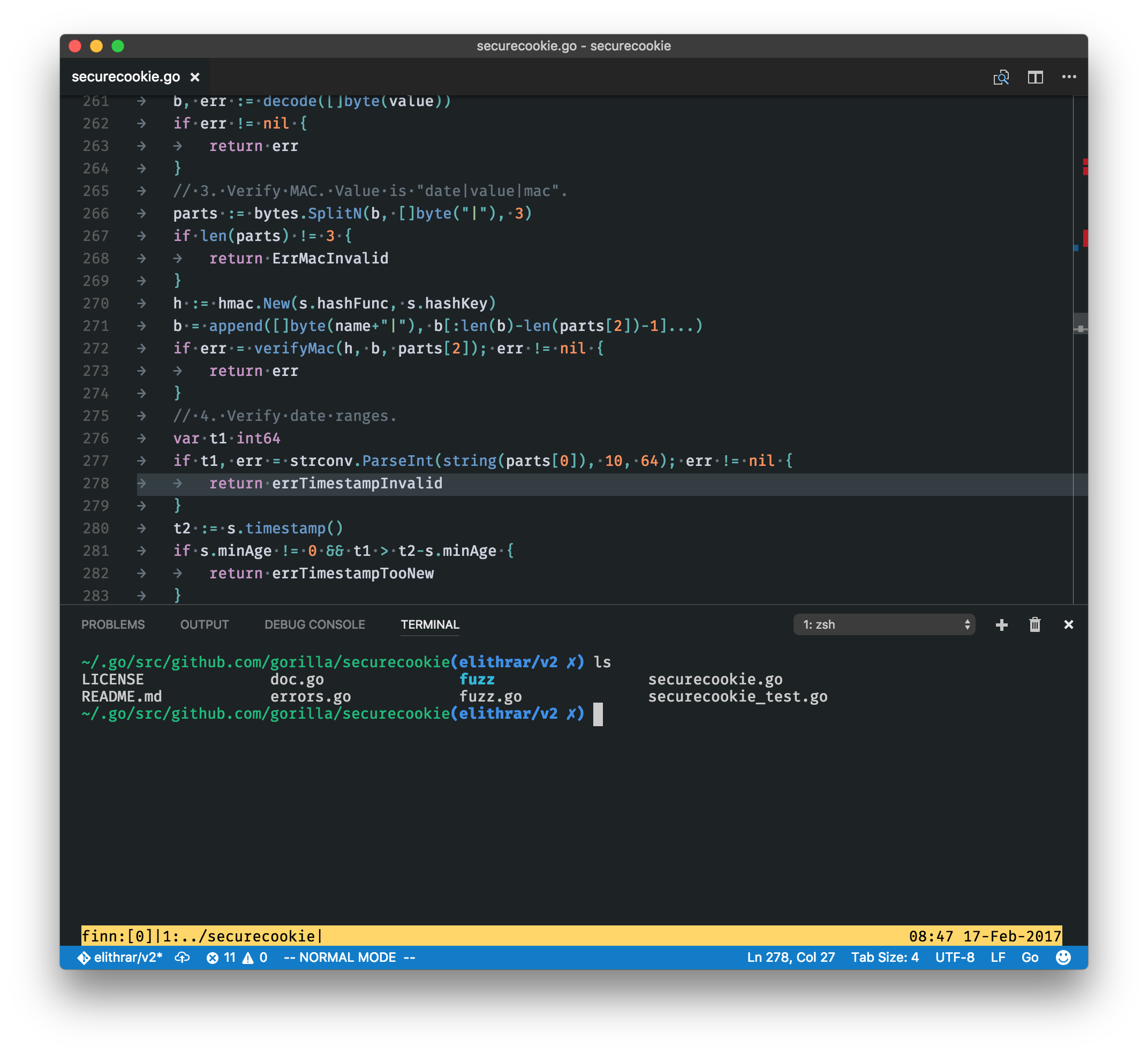

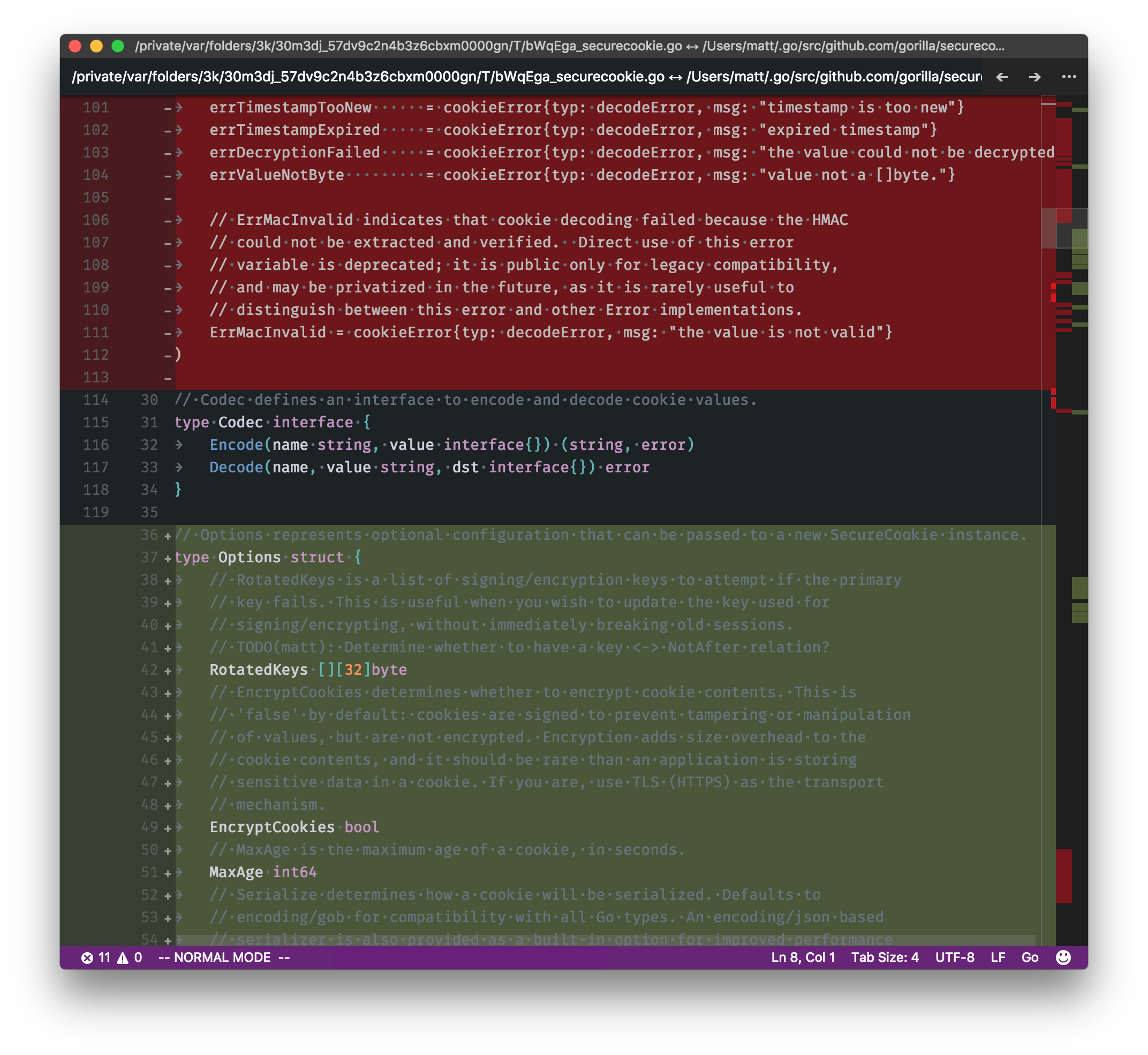

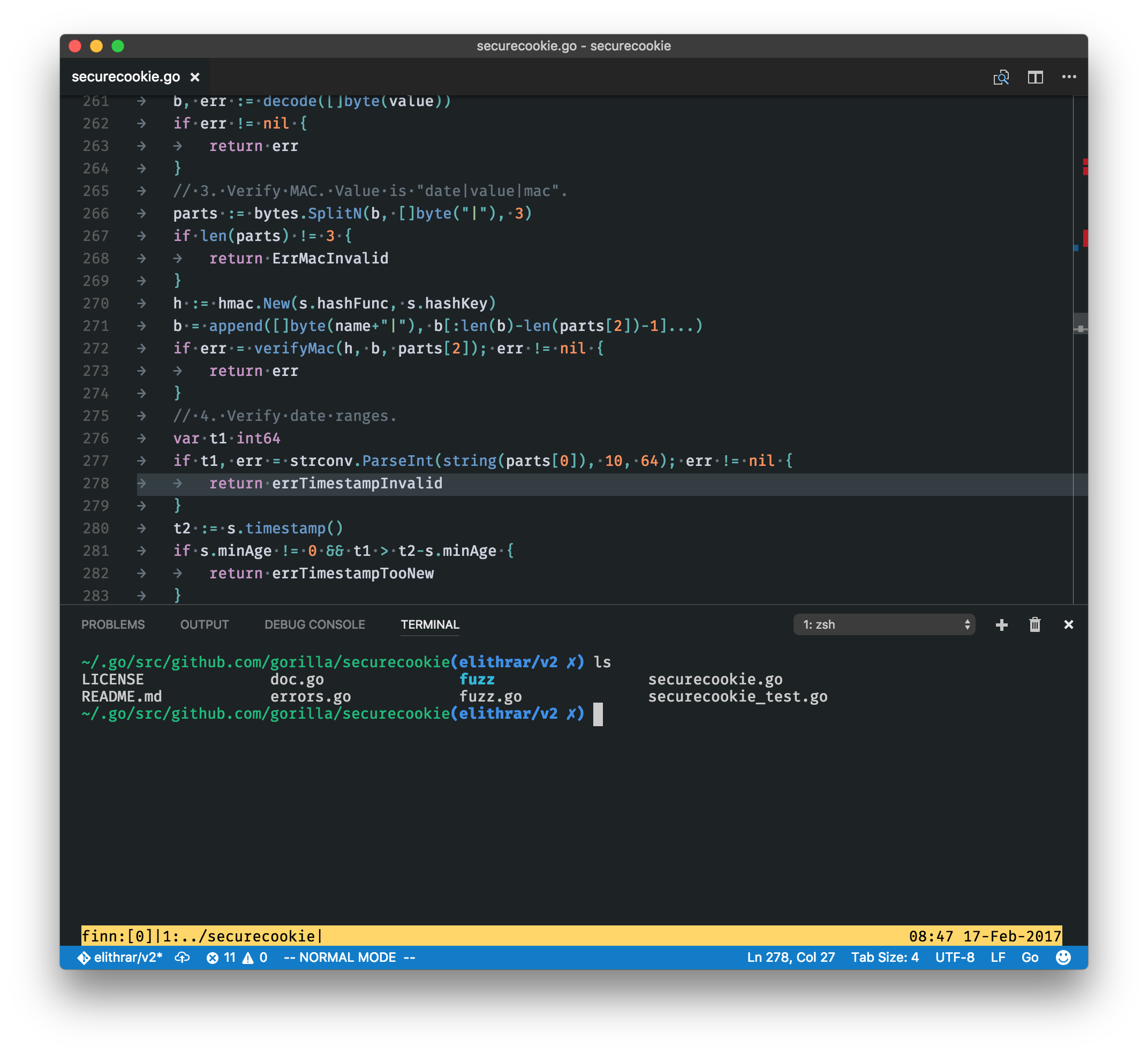

git difftool to use code --wait --diff $LOCAL $REMOTE.

Note that I still used vim keybindings via VSCodeVim. I can’t imagine programming another way.

Notes

Worth noting before you read on:

- When I say “vim” I specifically mean neovim: I hard-switched sometime late in 2015 (it was easy) and haven’t looked back.

- I write a lot of Go, some Python, Bash & and ‘enough’ JavaScript (primarily Vue.js), so my thoughts are going to be colored by the workflows around these languages.

- vim-go single-handedly gives vim a productivity advantage, but vscode-go isn’t too far behind. The open-godoc-in-a-vim-split (and generally better split usage) of vim-go is probably what wins out

Saying that, the autocompletion in vscode-go is richer and clearer, thanks to VS Code’s better autocompletion as-a-whole, and will get better.

Workflow

Throughout this period, I realised I had two distinct ways of using an editor, each prioritizing different things:

- Short, quick edits. Has to launch fast. This is typically anything that uses

$EDITOR (git commit/rebase), short scripts, and quickly manipulating data (visual block mode + regex work well for this).

- Whole projects. Must manage editing/creating multiple files, provide Git integration, debugging across library boundaries, and running tests.

Lots of overlap, but it should be obvious where I care about launch speed vs. file management vs. deeper language support.

Short Edits

Observations:

- VS Code’s startup speed isn’t icicle-like (think: early Atom), but it’s still slow, especially from a cold-start. ~5 seconds from

code $filename to a text-rendered-and-extensions-loaded usable, which is about twice that of a plugin-laden neovim.

- Actual in-editor performance is good: command responsiveness, changing tabs, and jumping to declarations never feels slow.

- If you’ve started in the shell, switching to another application to edit a file or modify a multi-line shell command can feel a little clunky. I’d typically handle this by opening a new tmux split (retaining a prompt where I needed it) and then using vim to edit what I needed.

Despite these things, it’s still capable of these tasks. vim just had a huge head-start, and is most at home in a terminal-based environment.

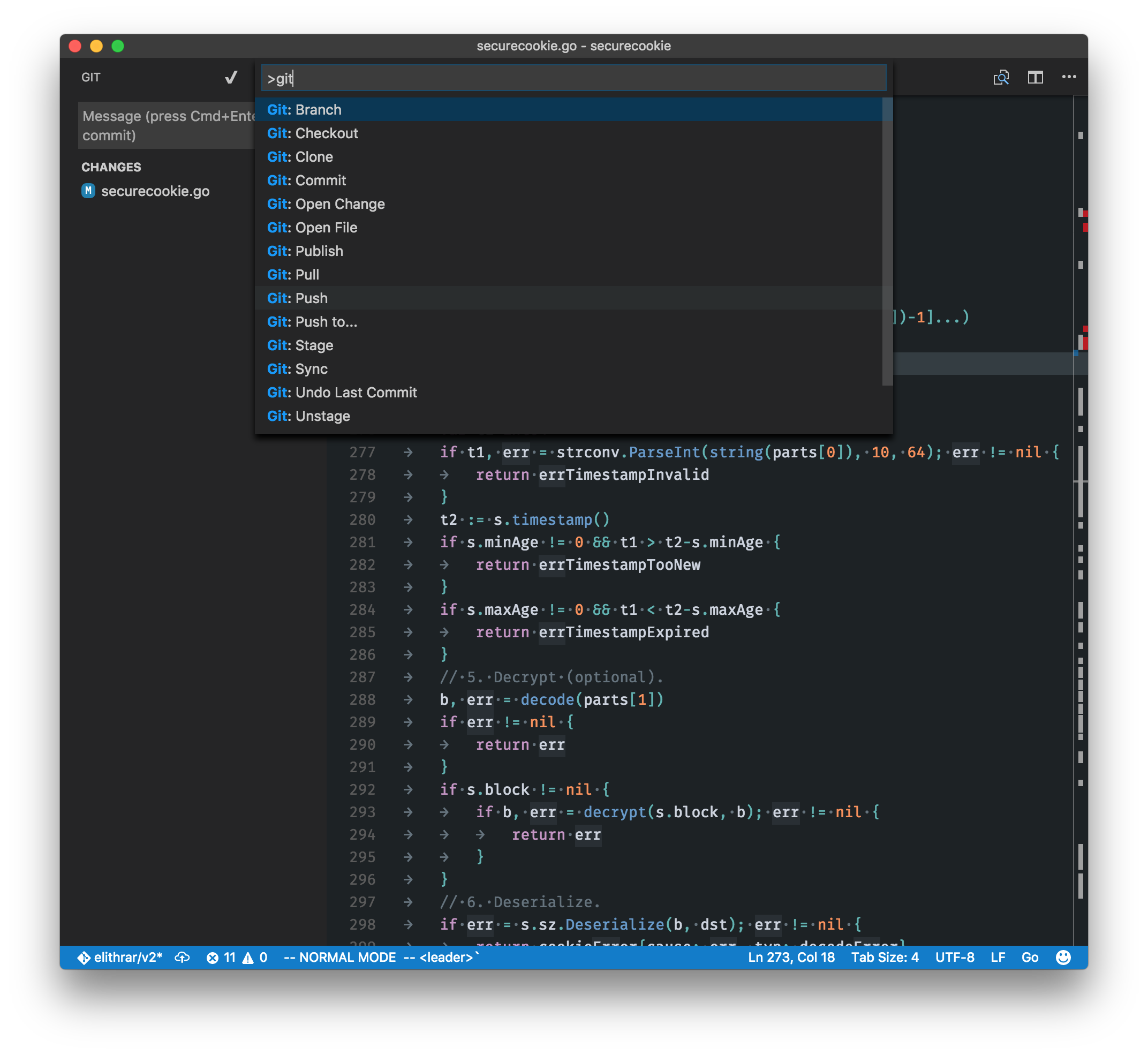

Whole Projects

VS Code is really good here, and I think whole-project workflows are its strength, but it’s not perfect.

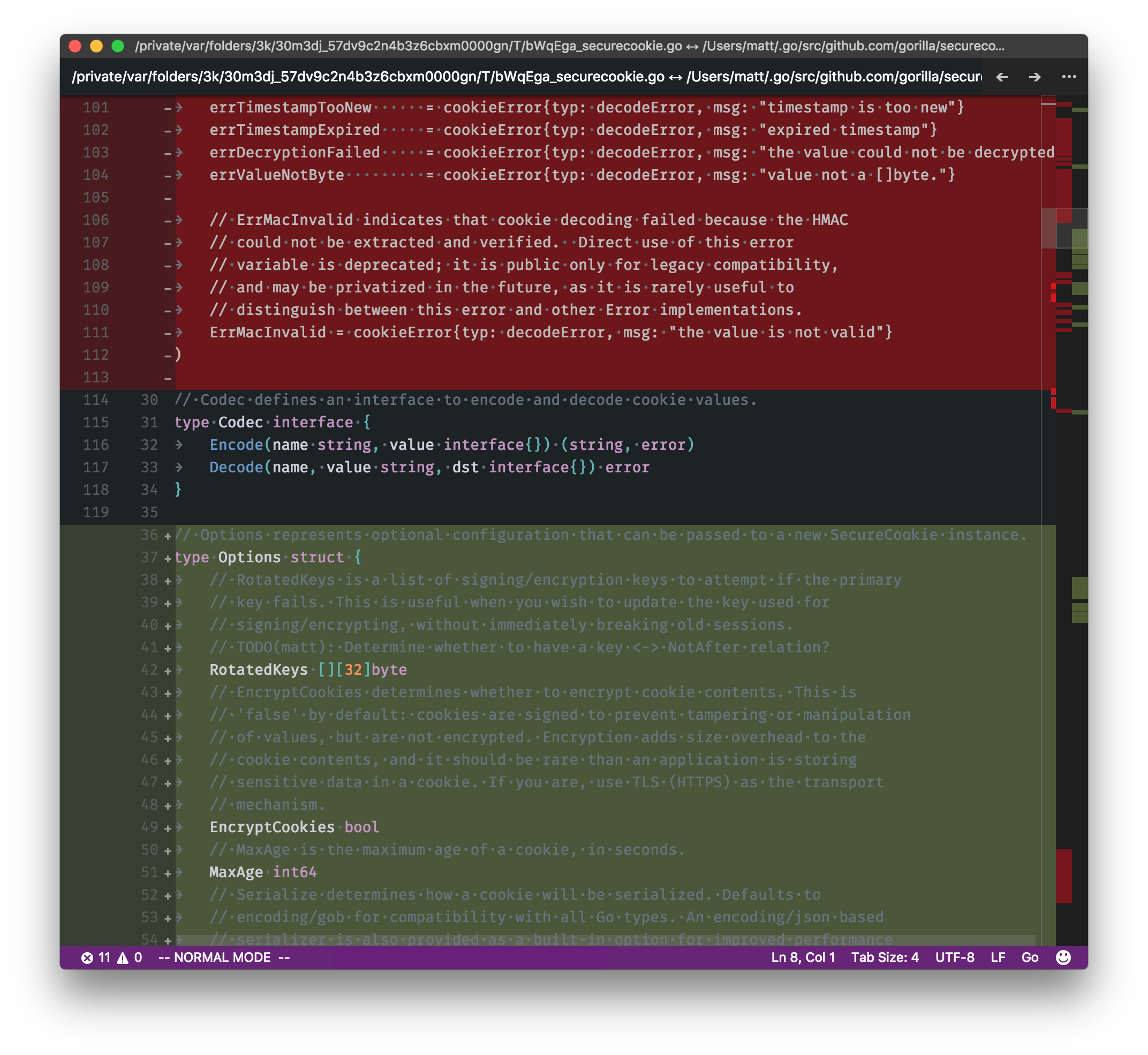

- The built-in Git support rivals vim-fugitive, moving between splits/buffers is fast, and it’s easy enough to hide. The default side-by-side diffs look good, although you don’t have as many tools to do a 3-way merge (via

:bufget, etc) as you do with vim-fugitive.

- Find-in-files is quick, although I miss some of the power of

ag.vim, which hooks into my favorite grep replacement, the-silver-searcher.

- What I miss from NERDTree is the ability to search it just like a buffer:

/filename is incredibly useful on larger projects with more complex directory structures (looking at you, JS frameworks!). You’re also not able to navigate the filesystem up from the directory you opened, although I did see an issue for this and a plan for improvement.

I should note that opening a directory in VS Code triggers a full reload, which can be a little disruptive.

Other Things

There’s a bunch of smaller things that, whilst unimportant in the scope of getting things done, are still noticeable:

- Font rendering. If you’re on macOS (nee OS X), then you’ll notice that VS Code’s font rendering (Chromium’s font rendering) is a little different from your regular terminal or other applications. Not worse; just different.

- Tab switching: good! Fast, and there’s support for vim commands like

:vsp to open files in splits.

- You can use most of vim’s substitution syntax:

:s/before/after/(g) works as expected. gc (for confirmation) doesn’t appear to work.

- EasyMotion support is included in the VSCodeVim plugin: although I’m a vim-sneak user, EasyMotion is arguably more popular among vim users and serves the same overall goals (navigating short to medium distances quickly).

<leader><leader>f<char> (in my config) allows me to easily search forwards by character.

- The native terminal needs a bunch of work to make me happy. It’s based on xterm.js, which could do with a lot more love if VS Code is going to tie itself to it. It just landed support for hyperlinks (in VS Code 1.9), but solid tmux support is still lacking and makes spending time in the terminal feel like a chore vs. iTerm.

- Configuration: I aliased

keybindings.json and settings.json into my dotfiles repository, so I can effectively sync them across machines like .vimrc. Beyond that: VS Code is highly configurable, and although writing out JSON can be tedious, it does a lot to make changing default settings as easy as possible for you (autocompletion of settings is a nice touch).

You might also be asking: what about connecting to other machines remotely, where you only have an unadorned vim on the remote machine? That wasn’t a problem with vim thanks to the netrw plugin—you would connect to/browse the remote filesystem with your local, customized vim install—but is a shortcoming with VS Code. I wasn’t able to find a robust extension that would let me do this, although (in my case) it’s increasingly rare to SSH into a box given how software is deployed now. Still, worth keeping in mind if vim scp://user@host:/path/to/script.sh is part of your regular workflow.

So, Which Editor?

I like VS Code a lot. Many of the things that frustate me are things that can be fixed, although I suspect improving startup speed will be tough (loading a browser engine underneath and all). Had I tried it 6+ months ago it’s likely I would have stuck with vim. Now, the decision is much harder.

I’m going to stick it out, because for the language I write the most (Go), the excellent autocompletion and toolchain integration beat out the other parts. If you are seeking a modern editor with 1:1 feature parity with vim, then look elsewhere: each editor brings its own things to the table, and you’ll never be pleased if you’re seeking that.

•••

In a migration of an internal admin dashboard from Vue 1 to Vue 2 (my JS framework of choice), two-way filters were deprecated. I’d been using two-way filters to format JSON data (stringifying it) and parsing user-input (strings) back to the original data type so that the dashboard wouldn’t need to know about the (often changing) schema of the API at the time.

In the process of re-writing the filter as a custom form input component, it needed to:

- Be able to check the type of the data it was handling, and validate input against that type (if it was a Number, then Strings are invalid)

- Be unaware the type of the data ahead-of-time (the API was in-flux, and I wanted it to adaptive)

- Format more complex types (Objects/Arrays) appropriately for a form field.

Custom input components accept a value prop and emit an input event via the familiar v-model directive in Vue. Customising what happens in-between is where the value of writing your own input implementation comes in:

<tr v-for="(val, key) in item">

<td class="label"></td>

<td>

<json-input :label=key v-model="item[key]"></json-input>

</td>

</tr>

The parent component otherwise passes values to this component in v-model just like any other.

The Code

Although this is written as a single-file component, I’ve broken it down into two pieces.

The template section of the component is fairly straightforward:

<template>

<input

ref="input"

v-bind:class="{ dirty: isDirty }"

v-bind:value="format(value)"

v-on:input="parse($event.target.value)"

>

</template>

The key parts are v-bind:value (what we emit) and v-on:input (the input). The ref="input" attribute allows us to emit events via the this.$emit(ref, data) API.

Lodash includes well-tested type-checking functions: I use these for the initial checks instead of reinventing the wheel. Notably, isPlainObject should be preferred over isObject, as the latter has a broader meaning. I also use debounce to add a short delay to the input -> parse function call, so that we’re not overly aggressive about saying ‘invalid’ before the user has a chance to correct typos.

<script>

import debounce from "lodash.debounce"

import { isBoolean, isString, isPlainObject, isArrayLikeObject, isNumber, isFinite, toNumber } from "lodash"

export default {

name: "json-input",

props: {

// The form label/key

label: {

type: String,

required: true

},

// The form value

value: {

required: true

}

},

data () {

return {

// dirty is true if the type of the field doesn't match the original

// value passed.

dirty: false,

// typeChecked is true when the type of the original value has been

// checked. This allows us to validate user-input against the original

// (expected) type.

typeChecked: false,

isObject: false,

isBoolean: false,

isNumber: false,

isString: false

}

},

computed: {

isDirty: function () {

return this.dirty

}

},

methods: {

// init determines the JS type of the field (once) during initialization.

init: function () {

this.typeChecked = false

this.isObject = false

this.isBoolean = false

this.isNumber = false

this.isString = false

if (isPlainObject(this.value) || isArrayLikeObject(this.value)) {

this.isObject = true

} else if (isNumber(this.value)) {

this.isNumber = true

} else if (isBoolean(this.value)) {

this.isBoolean = true

} else if (isString(this.value)) {

this.isString = true

}

this.typeChecked = true

},

// format returns a formatted value based on its type; Objects are

// JSON.stringify'ed, and Boolean & Number values are noted to prevent

// reading them back as strings.

format: function () {

// Check the types of our fields on the initial format.

if (!this.typeChecked) {

this.init()

}

var res

if (this.isObject) {

res = JSON.stringify(this.value)

} else if (this.isNumber) {

res = this.value

} else if (this.isBoolean) {

res = this.value

} else if (this.isString) {

res = this.value

} else {

res = JSON.stringify(this.value)

}

return res

},

// Based on custom component events from

// https://vuejs.org/v2/guide/components.html#Form-Input-Components-using-Custom-Events

parse: debounce(function (value) {

this.dirty = false

if (this.isObject) {

var res

try {

res = JSON.parse(value)

this.$emit("input", this.format(res))

} catch (e) {

// Mark the field as dirty.

this.dirty = true

res = value

}

this.$emit("input", res)

return

}

// Check the original type of the value; if the user-input does not conform

// flag the field as dirty.

if (this.isBoolean) {

if (value === "true" || value === "false") {

this.dirty = false

// Convert back to a Boolean.

this.$emit("input", (value === "true"))

return

}

this.dirty = true

this.$emit("input", value)

return

} else if (this.isNumber) {

// Convert numbers back to numbers.

let num = toNumber(value)

if (isNumber(num) && isFinite(num)) {

this.$emit("input", num)

return

}

this.dirty = true

this.$emit("input", value)

return

} else {

// Write other types as-is.

this.$emit("input", value)

return

}

}, 1000)

}

}

</script>

There’s a reasonable amount to digest here, but it makes sense if you think of it in three steps:

init() - called on the initial format only. It type-checks the initial data, and sets typeChecked = true so we don’t run this again for the life of the component. The Lodash functions we import simplify this for us.format() - this method is responsible for emitting the value (e.g. to the DOM): it stringifies objects, converts any number back to a Number proper, etc.parse() - validates all user input against that initial type we asserted in the init method. If the user input is invalid, we set this.dirty = true (and add a CSS class of ‘dirty’) and emit the invalid value as-is, for the user to correct. TODO: return “input should be a Number” as a helpful error.

Steps #2 and #3 are universal to any custom form input component: how the data comes in, and how it goes out. This doesn’t just apply to <input> either: you could easily write your own <select> or <textarea> component by adapting this approach.

Wrap

Here’s a working demo: enter a non-Number value into the input and it’ll flag it appropriately. Change the value/type of the data in the parent Vue instance, re-run it, and you’ll see the component validate the new type automatically.

•••

It’s 2016. You’re about to tie together a Popular Front-End JavaScript framework with a web service

written in Go, but you’re also looking for a way to have Go serve the static files as well as your

REST API. You want to:

- Serve your

/api/ routes from your Go service

- Also have it serve the static content for your application (e.g. your JS bundle, CSS, assets)

- Any other route will serve the

index.html entrypoint, so that deep-linking into your JavaScript

application still works when using a front-end router - e.g.

vue-router,

react-router, Ember’s routing.

Here’s how.

The Folder Layout

Here’s a fairly simple folder layout: we have a simple Vue.js application

sitting alongside a Go service. Our Go’s main() is contained in serve.go, with the datastore

interface and handlers inside datastore/ and handlers/, respectively.

~ gorilla-vue tree -L 1

.

├── README.md

├── datastore

├── dist

├── handlers

├── index.html

├── node_modules

├── package.json

├── serve.go

├── src

└── webpack.config.js

~ gorilla-vue tree -L 1 dist

dist

├── build.js

└── build.js.map

With this in mind, let’s see how we can serve index.html and the contents of our dist/

directory.

Note: If you’re looking for tips on how to structure a Go service, read through

@benbjohnson’s excellent Gophercon 2016

talk.

Serving Your JavaScript Entrypoint.

The example below uses gorilla/mux, but you can achieve this with

vanilla net/http or

httprouter, too.

The main takeaway is the combination of a catchall route and http.ServeFile, which effectively

serves our index.html for any unknown routes (instead of 404’ing). This allows something like

example.com/deep-link to still run your JS application, letting it handle the route explicitly.

package main

import (

"encoding/json"

"flag"

"net/http"

"os"

"time"

"log"

"github.com/gorilla/handlers"

"github.com/gorilla/mux"

)

func main() {

var entry string

var static string

var port string

flag.StringVar(&entry, "entry", "./index.html", "the entrypoint to serve.")

flag.StringVar(&static, "static", ".", "the directory to serve static files from.")

flag.StringVar(&port, "port", "8000", "the `port` to listen on.")

flag.Parse()

r := mux.NewRouter()

// Note: In a larger application, we'd likely extract our route-building logic into our handlers

// package, given the coupling between them.

// It's important that this is before your catch-all route ("/")

api := r.PathPrefix("/api/v1/").Subrouter()

api.HandleFunc("/users", GetUsersHandler).Methods("GET")

// Optional: Use a custom 404 handler for our API paths.

// api.NotFoundHandler = JSONNotFound

// Serve static assets directly.

r.PathPrefix("/dist").Handler(http.FileServer(http.Dir(static)))

// Catch-all: Serve our JavaScript application's entry-point (index.html).

r.PathPrefix("/").HandlerFunc(IndexHandler(entry))

srv := &http.Server{

Handler: handlers.LoggingHandler(os.Stdout, r),

Addr: "127.0.0.1:" + port,

// Good practice: enforce timeouts for servers you create!

WriteTimeout: 15 * time.Second,

ReadTimeout: 15 * time.Second,

}

log.Fatal(srv.ListenAndServe())

}

func IndexHandler(entrypoint string) func(w http.ResponseWriter, r *http.Request) {

fn := func(w http.ResponseWriter, r *http.Request) {

http.ServeFile(w, r, entrypoint)

}

return http.HandlerFunc(fn)

}

func GetUsersHandler(w http.ResponseWriter, r *http.Request) {

data := map[string]interface{}{

"id": "12345",

"ts": time.Now().Format(time.RFC3339),

}

b, err := json.Marshal(data)

if err != nil {

http.Error(w, err.Error(), 400)

return

}

w.Write(b)

}

Build that, and then run it, specifying where to find the files:

go build serve.go

./serve -entry=~/gorilla-vue/index.html -static=~/gorilla-vue/dist/

You can see an example app live here

Summary

That’s it! It’s pretty simple to get this up and running, and there’s already a few ‘next steps’ we

could take: some useful caching middleware for setting Cache-Control headers when serving our

static content or index.html or using Go’s html/template

package to render the initial index.html (adding a CSRF meta tag, injecting hashed asset URLs).

If something is non-obvious and/or you get stuck, reach out via

Twitter.

•••

You’re building a web (HTTP) service in Go, and you want to unit test your handler functions. You’ve

got a grip on Go’s net/http package, but you’re not sure where

to start with testing that your handlers return the correct HTTP status codes, HTTP headers or

response bodies.

Let’s walk through how you go about this, injecting the necessary dependencies, and mocking the

rest.

A Basic Handler

We’ll start by writing a basic test: we want to make sure our handler returns a HTTP 200 (OK) status code. This is our handler:

// handlers.go

package handlers

// e.g. http.HandleFunc("/health-check", HealthCheckHandler)

func HealthCheckHandler(w http.ResponseWriter, r *http.Request) {

// A very simple health check.

w.WriteHeader(http.StatusOK)

w.Header().Set("Content-Type", "application/json")

// In the future we could report back on the status of our DB, or our cache

// (e.g. Redis) by performing a simple PING, and include them in the response.

io.WriteString(w, `{"alive": true}`)

}

And this is our test:

// handlers_test.go

package handlers

import (

"net/http"

"net/http/httptest"

"testing"

)

func TestHealthCheckHandler(t *testing.T) {

// Create a request to pass to our handler. We don't have any query parameters for now, so we'll

// pass 'nil' as the third parameter.

req, err := http.NewRequest("GET", "/health-check", nil)

if err != nil {

t.Fatal(err)

}

// We create a ResponseRecorder (which satisfies http.ResponseWriter) to record the response.

rr := httptest.NewRecorder()

handler := http.HandlerFunc(HealthCheckHandler)

// Our handlers satisfy http.Handler, so we can call their ServeHTTP method

// directly and pass in our Request and ResponseRecorder.

handler.ServeHTTP(rr, req)

// Check the status code is what we expect.

if status := rr.Code; status != http.StatusOK {

t.Errorf("handler returned wrong status code: got %v want %v",

status, http.StatusOK)

}

// Check the response body is what we expect.

expected := `{"alive": true}`

if rr.Body.String() != expected {

t.Errorf("handler returned unexpected body: got %v want %v",

rr.Body.String(), expected)

}

}

As you can see, Go’s testing and

httptest packages make testing our handlers extremely

simple. We construct a *http.Request, a *httptest.ResponseRecorder, and then check how our

handler has responded: status code, body, etc.

If our handler also expected specific query parameters or looked for certain

headers, we could also test those:

// e.g. GET /api/projects?page=1&per_page=100

req, err := http.NewRequest("GET", "/api/projects",

// Note: url.Values is a map[string][]string

url.Values{"page": {"1"}, "per_page": {"100"}})

if err != nil {

t.Fatal(err)

}

// Our handler might also expect an API key.

req.Header.Set("Authorization", "Bearer abc123")

// Then: call handler.ServeHTTP(rr, req) like in our first example.

Further, if you want to test that a handler (or middleware) is mutating the request in a particular

way, you can define an anonymous function inside your test and capture variables from within by

declaring them in the outer scope.

// Declare it outside the anonymous function

var token string

test http.HandlerFunc(func(w http.ResponseWriter, r *http.Request){

// Note: Use the assignment operator '=' and not the initialize-and-assign

// ':=' operator so we don't shadow our token variable above.

token = GetToken(r)

// We'll also set a header on the response as a trivial example of

// inspecting headers.

w.Header().Set("Content-Type", "application/json")

})

// Check the status, body, etc.

if token != expectedToken {

t.Errorf("token does not match: got %v want %v", token, expectedToken)

}

if ctype := rr.Header().Get("Content-Type"); ctype != "application/json") {

t.Errorf("content type header does not match: got %v want %v",

ctype, "application/json")

}

Tip: make strings like application/json or Content-Type package-level constants, so you don’t

have to type (or typo) them over and over. A typo in your tests can cause unintended behaviour,

becasue you’re not testing what you think you are.

You should also make sure to test not just for success, but for failure too: test that your handlers

return errors when they should (e.g. a HTTP 403, or a HTTP 500).

Populating context.Context in Tests

What about when our handlers are expecting data to be passed to them in a

context.Context? How we can create a context and

populate it with (e.g.) an auth token and/or our User type?

Go 1.7 added the Request.Context() method, thus supporting request contexts natively. We’ll use what net/http provides to make our application compatible with as many libraries as we might need in the future.

Note that for the below example, the standard http.Handler and http.HandlerFunc types. Whilst these testing methods are easy enough to ‘port’ to other routers using their own types, the best routers are those that are compatible with Go’s existing interfaces. chi and gorilla/mux are my picks.

func TestGetProjectsHandler(t *testing.T) {

req, err := http.NewRequest("GET", "/api/users", nil)

if err != nil {

t.Fatal(err)

}

rr := httptest.NewRecorder()

// e.g. func GetUsersHandler(ctx context.Context, w http.ResponseWriter, r *http.Request)

handler := http.HandlerFunc(GetUsersHandler)

// Populate the request's context with our test data.

ctx := req.Context()

ctx = context.WithValue(ctx, "app.auth.token", "abc123")

ctx = context.WithValue(ctx, "app.user",

&YourUser{ID: "qejqjq", Email: "user@example.com"})

// Add our context to the request: note that WithContext returns a copy of

// the request, which we must assign.

req = req.WithContext(ctx)

handler.ServeHTTP(rr, req)

// Check the status code is what we expect.

if status := rr.Code; status != http.StatusOK {

t.Errorf("handler returned wrong status code: got %v want %v",

status, http.StatusOK)

}

}

Extending this, we can also test that middleware populating the context does so correctly:

// e.g. middleware.go

func RequestIDMiddleware(h http.Handler) http.Handler {

fn := func(w http.ResponseWriter, r *http.Request) {

// More correctly, we'd use a const key of type struct{} and a random ID via

// crypto/rand.

ctx := context.WithValue(r.Context(), "app.req.id", "12345")

h.ServeHTTP(w, r.WithContext(ctx))

}

return http.HandlerFunc(fn)

}

// e.g. middleware_test.go

func TestPopulateContext(t *testing.T) {

req, err := http.NewRequest("GET", "/api/users", nil)

if err != nil {

t.Fatal(err)

}

testHandler := http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {

if val, ok := r.Context().Value("app.req.id").(string); !ok {

t.Errorf("app.req.id not in request context: got %q", val)

}

})

rr := httptest.NewRecorder()

// func RequestIDMiddleware(h http.Handler) http.Handler

// Stores an "app.req.id" in the request context.

handler := RequestIDMiddleware(testHandler)

handler.ServeHTTP(rr, req)

}

Running go test in our package should see this pass. The inverse of this approach is also useful - e.g. testing that admin tokens aren’t incorrectly applied to the wrong users or contexts aren’t passing the wrong values to wrapped handlers.

Mocking Database Calls

Our handlers expect that we pass them a datastore.ProjectStore (an interface type) with three

methods (Create, Get, Delete). We want to stub this for testing so that we can test that our

handlers (endpoints) return the correct status codes.

You should read this Thoughtbot

article and this article from

Alex Edwards if

you’re looking to use interfaces to abstract access to your database.

// handlers_test.go

package handlers

// Throws errors on all of its methods.

type badProjectStore struct {

// This would be a concrete type that satisfies datastore.ProjectStore.

// We embed it here so that our goodProjectStub type has all the methods

// needed to satisfy datastore.ProjectStore, without having to stub out

// every method (we might not want to test all of them, or some might be

// not need to be stubbed.

*datastore.Project

}

func (ps *projectStoreStub) CreateProject(project *datastore.Project) error {

return datastore.NetworkError{errors.New("Bad connection"}

}

func (ps *projectStoreStub) GetProject(id string) (*datastore.Project, error) {

return nil, datastore.NetworkError{errors.New("Bad connection"}

}

func TestGetProjectsHandlerError(t *testing.T) {

var store datastore.ProjectStore = &badProjectStore{}

// We inject our environment into our handlers.

// Ref: http://elithrar.github.io/article/http-handler-error-handling-revisited/

env := handlers.Env{Store: store, Key: "abc"}

req, err := http.NewRequest("GET", "/api/projects", nil)

if err != nil {

t.Fatal(err)

}

rr := httptest.Recorder()

// Handler is a custom handler type that accepts an env and a http.Handler

// GetProjectsHandler here calls GetProject, and should raise a HTTP 500 if

// it fails.

handler := Handler{env, GetProjectsHandler)

handler.ServeHTTP(rr, req)

// We're now checking that our handler throws an error (a HTTP 500) when it

// should.

if status := rr.Code; status != http.StatusInternalServeError {

t.Errorf("handler returned wrong status code: got %v want %v"

rr.Code, http.StatusOK)

}

// We'll also check that it returns a JSON body with the expected error.

expected := []byte(`{"status": 500, "error": "Bad connection"}`)

if !bytes.Equals(rr.Body.Bytes(), expected) {

t.Errorf("handler returned unexpected body: got %v want %v",

rr.Body.Bytes(), expected)

}

This was a slightly more complex example—but highlights how we might:

- Stub out our database implementation: the unit tests in

package handlers should not need a

test database.

- Create a stub that intentionally throws errors, so we can test that our

handlers throw the right status code (e.g. a HTTP 500) and/or write the

expected response.

- How you might go about creating a ‘good’ stub that returns a (static)

*datastore.Project and test that (for example) we can marshal it as JSON.

This would catch the case where changes to the upstream type might cause

it to be incompatible with encoding/json.

What Next?

This is by no means an exhaustive guide, but it should get you started. If you’re stuck building a

more complex example, then ask over on the Gophers Slack

community, or take a look at the packages that import

httptest via GoDoc.